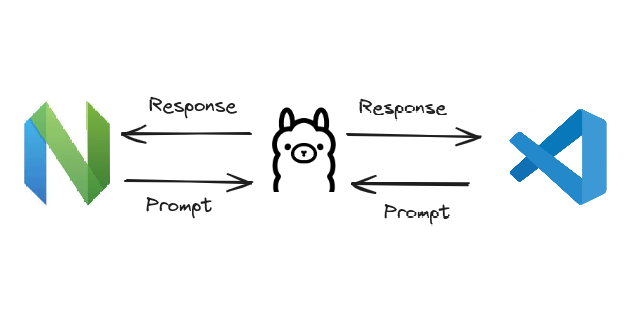

Self-Hosting LLMs Using Ollama

Ollama provides an interface for self-hosting and interacting with open-source LLMs (Large Language Models) through its binary or container image. Managing LLMs with Ollama resembles managing the container lifecycle with a container engine such as Docker or Podman. The Ollama commands `pull` and `run` download and execute LLMs respectively, much like the commands of the same name in Podman or Docker. Tags such as `13b-python` and `7b-code` identify different variations of an LLM....
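To make the container analogy concrete, here is a minimal sketch of how a model name and tag combine into an Ollama command line. The helper names `model_ref` and `ollama_command` are hypothetical, and `codellama` is assumed as the model that carries the `7b-code` and `13b-python` tags, since the text above does not name one. The script only builds and prints the commands; it does not download anything.

```python
from typing import List, Optional


def model_ref(name: str, tag: Optional[str] = None) -> str:
    """Join a model name and an optional tag as name:tag, like a container image."""
    return f"{name}:{tag}" if tag else name


def ollama_command(action: str, name: str, tag: Optional[str] = None) -> List[str]:
    """Build the argument list for `ollama pull` or `ollama run` (hypothetical helper)."""
    if action not in {"pull", "run"}:
        raise ValueError(f"unsupported action: {action}")
    return ["ollama", action, model_ref(name, tag)]


if __name__ == "__main__":
    # Assumption: codellama is the model that publishes these tags.
    for action, tag in [("pull", "7b-code"), ("run", "13b-python")]:
        print(" ".join(ollama_command(action, "codellama", tag)))
```

On a machine with Ollama installed, the returned list could be passed to `subprocess.run(cmd, check=True)` to perform the pull or start the model. Omitting the tag leaves the choice of variant to Ollama's default, in the same way an untagged image name does with Docker or Podman.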